Researcher: How to spot AI-generated videos

"Every time a new model is launched, it feels even more realistic," says Gabriele de Seta.

Recently, you may have come across an online video that left you wondering whether it was real or fake.

OpenAI, the company behind ChatGPT, recently launched a new video and audio generation model called Sora 2.

This AI tool is a text-to-video model that learns by connecting words and images using massive datasets.

Sora can, for example, generate a video of a cat baking a cake by drawing on data showing cats, cakes, and people baking.

"It combines these elements to create a surprisingly convincing result, even though no real videos of cats baking cakes exist," says Gabriele de Seta.

He studies artificial intelligence at the University of Bergen.

Feels more realistic

The newest AI videos look incredibly realistic. How is that possible? According to de Seta, it's because developers have fine-tuned the model to mimic real-life formats.

The model imitates selfie videos, surveillance cameras, and funny clips that have gone viral on social media.

"Because it feels familiar, it becomes more believable," he explains.

De Seta also points to what he calls the novelty effect.

"Every time a new model is launched, it feels even more realistic. Since we're not used to the new tweaks, it's easier for us to be fooled," he says.

"A bit like getting vaccinated"

AI technology is advancing rapidly, and de Seta believes some videos are now so realistic that it’s almost impossible to tell they’re artificially generated.

"But those videos still take a lot of work. If you only give the model simple prompts, the results can be inconsistent," he says.

De Seta explains that most AI-generated humans and animals still move somewhat unnaturally, and objects can change shape or position when the camera moves.

He adds that most AI videos are only a few seconds long. Longer ones are often stitched together from several short clips.

"These are clues that a video might be AI-generated," the researcher says.

However, he warns against relying too much on such clues, as the technology is improving fast.

De Seta's best advice for learning to spot AI videos is to try the tools yourself.

"By playing around with them and seeing what they can do, you'll get better at recognising the videos they produce. It's a bit like getting vaccinated," he adds.

Brings new challenges

As AI tools continue to develop, so do concerns about their role in spreading disinformation.

De Seta explains that AI models have been used to spread false information for years, though research shows they’re not necessarily more effective than traditional methods.

"What's certain, however, is that AI creates a lot of uncertainty," he says.

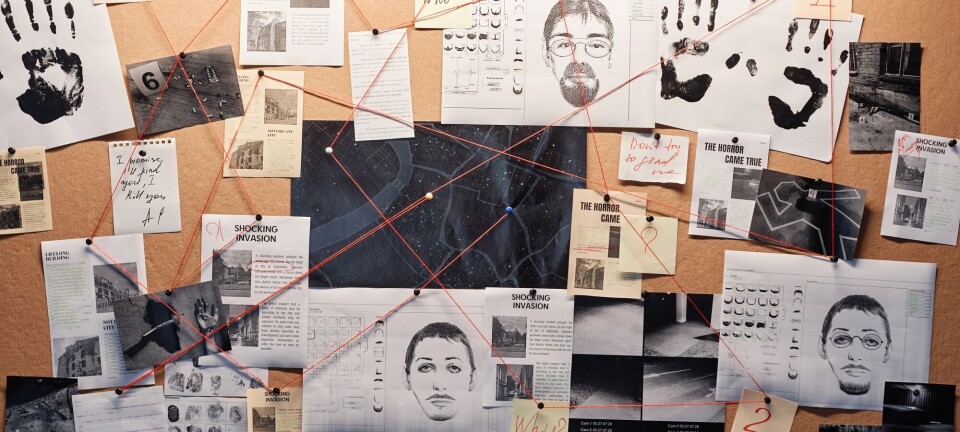

De Seta describes a phenomenon researchers call "the liar's dividend."

"The fact that everything could potentially be fake means that audiences have to spend more time and effort verifying content. So far, AI hasn't been particularly successful at spreading disinformation, but it's been very good at spreading doubt and suspicion instead," says de Seta.

———

Translated by Alette Bjordal Gjellesvik

Read the Norwegian version of this article on forskning.no
